I need some basic documentation for the web app. Here are the things that would be helpful to get started:

-

Controls->Move: Define those buttons, at least with a tooltip. Just from their layout, it's not clear which button relates to which axis. From fiddling around, it seems that the < and > are for x. The ^ and v that sit between the x buttons control y, and the ^ and v to the right of the x buttons control z. At this time, I have no idea what the “home” button is for.

-

Controls->Peripherals. What’s it for?

-

Device -> Farmware. Nothing is shown there. Is that a problem?

-

Device -> Device. Auto updates don’t seem to work. The camera dropdown says “None”, but I have a camera on the bot.

-

Device -> Weed detector. Help has all of two words -> “Detect Weeds”. I have no idea what to do with this section. It does, however, show some images from my FarmBot.

-

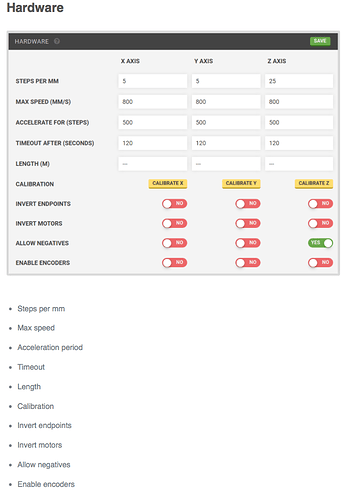

Device -> Hardware. This seems fairly important, but I have no idea how to “calibrate” any of the axes. What are endstops, and should they be enabled? Should the encoders be enabled? I’m pretty sure nothing interesting will be possible until I get the bot calibrated and homed. I operate CNC machines all the time. Most of my machines have Hall effect or optical homing switches. The machines power up and get homed to establish “machine” coordinates, then you’re off to running G-code in program coordinates after you set a ‘work offset’ that translates from machine to work coordinates. There doesn’t seem to be any theory of operation or description of how the coordinate spaces are supposed to work, or of how one goes about homing a system that doesn’t have any stops.
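
To make the question concrete, here is a minimal sketch of the machine-versus-work coordinate convention I am used to from CNC machines. It is only an illustration of what I mean by a work offset: the names `Point`, `machine_to_work`, and `work_to_machine` are made up for this example, and nothing here is taken from the FarmBot software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """A position in millimetres along the x, y and z axes."""
    x: float
    y: float
    z: float


def machine_to_work(machine: Point, work_offset: Point) -> Point:
    """Translate a machine-coordinate position into work coordinates.

    The work offset is the machine-coordinate position of the work origin,
    so the work position is the machine position minus that offset.
    """
    return Point(
        machine.x - work_offset.x,
        machine.y - work_offset.y,
        machine.z - work_offset.z,
    )


def work_to_machine(work: Point, work_offset: Point) -> Point:
    """Translate a work-coordinate position back into machine coordinates."""
    return Point(
        work.x + work_offset.x,
        work.y + work_offset.y,
        work.z + work_offset.z,
    )


# Hypothetical numbers: after homing, the work origin is set at this
# machine position.
offset = Point(100.0, 50.0, -20.0)

# A program move to work position (20, 30, -5) lands here in machine space.
target = work_to_machine(Point(20.0, 30.0, -5.0), offset)
```

On my machines, homing is what makes the machine coordinates repeatable in the first place, so the offset only means something after the axes have been homed. That is the part I can't map onto a system without stops.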

-

Tools -> Tools. I assume you want every customer to enter the basic tools that you ship with every robot since that list is empty? Or is this for “other” tools that didn’t come with the system?

-

Tools -> Toolbay1. Seems like you need to add tools to the tool bay, but none are found in the dropdown. Also, do you need to somehow figure out the x, y and z location of each tool? How do you configure the second tool bay?
